We recently realized that the free space value on a single datastore in our VMware datastore cluster wasn’t matching the free space we were seeing on the backing device sitting on our VPLEX arrays. Not even close; in fact, the underlying VPLEX distributed volume was fully utilized.

This clearly suggested something was going wrong with the unmap process for deleted blocks.

To troubleshoot, I jumped on an ESX host that has access to the datastore and ran some esxcli commands.

NOTE – You will need the naa ID of the backing device to run these commands.
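If you only know the datastore name, you can look up the naa ID in the output of `esxcli storage vmfs extent list`, which maps each VMFS volume to its backing device or devices. Below is a minimal Python sketch that parses that table. It assumes the usual esxcli layout: a header row, followed by a row of dashes whose runs mark each column's width. The function name `find_backing_devices` is hypothetical, and the only column headings it relies on are `Volume Name` and `Device Name`.

```python
from typing import Dict, List, Optional, Tuple


def find_backing_devices(extent_list_output: str, datastore: str) -> List[str]:
    """Return the device names (naa IDs) backing `datastore`.

    Hypothetical helper. Assumes the tabular layout of
    `esxcli storage vmfs extent list`: a header row, then a row of dashes
    whose runs mark each column's width.
    """
    lines = [ln for ln in extent_list_output.splitlines() if ln.strip()]
    # The separator row consists only of dashes and spaces.
    sep_idx = next(i for i, ln in enumerate(lines)
                   if set(ln.replace(" ", "")) == {"-"})

    # Each run of dashes gives one column's (start, end) character offsets.
    spans: List[Tuple[int, Optional[int]]] = []
    start = None
    for i, ch in enumerate(lines[sep_idx] + " "):
        if ch == "-" and start is None:
            start = i
        elif ch != "-" and start is not None:
            spans.append((start, i))
            start = None
    # Let the last column run to the end of the line.
    spans[-1] = (spans[-1][0], None)

    def cells(line: str) -> List[str]:
        return [line[s:e].strip() for s, e in spans]

    header = cells(lines[sep_idx - 1])
    rows: List[Dict[str, str]] = [dict(zip(header, cells(ln)))
                                  for ln in lines[sep_idx + 1:]]
    # Fixed-width slicing keeps volume names that contain spaces intact.
    return [r["Device Name"] for r in rows if r.get("Volume Name") == datastore]
```

For example, save the table with `esxcli storage vmfs extent list > /tmp/extents.txt` and pass its contents, along with the datastore name, to the function. A datastore that spans several extents returns one naa ID per extent.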

I first ran the following command to get some details on the backing device:

esxcli storage core device list -d naa.60001440000000XXXXXXXXXXXXXXXXX

Display Name: EMC Fibre Channel Disk (naa.60001440000000XXXXXXXXXXXXXXXXX)

Has Settable Display Name: true

Size:

Device Type: Direct-Access

Multipath Plugin: NMP

Devfs Path: /vmfs/devices/disks/naa.60001440000000XXXXXXXXXXXXXXXXX

Vendor: EMC

Model:

Revision: 6200

SCSI Level: 4

Is Pseudo: false

Status: on

Is RDM Capable: true

Is Local: false

Is Removable: false

Is SSD: false

Is VVOL PE: false

Is Offline: false

Is Perennially Reserved: false

Queue Full Sample Size: 0

Queue Full Threshold: 0

Thin Provisioning Status: unknown

Attached Filters:

VAAI Status: supported

Other UIDs: vml.02002601000001440000000XXXXXXXXXXXXXXXXXXXXXXXXXXXXX

Is Shared Clusterwide: true

Is SAS: false

Is USB: false

Is Boot Device: false

Device Max Queue Depth: 32

No of outstanding IOs with competing worlds: 32

Drive Type: unknown

RAID Level: unknown

Number of Physical Drives: unknown

Protection Enabled: false

PI Activated: false

PI Type: 0

PI Protection Mask: NO PROTECTION

Supported Guard Types: NO GUARD SUPPORT

DIX Enabled: false

DIX Guard Type: NO GUARD SUPPORT

Emulated DIX/DIF Enabled: false

The bit of this output you need to pay attention to is Thin Provisioning Status, which is set to unknown!
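Instead of eyeballing the dump, you can parse the indented `Key: Value` lines and check them programmatically. Below is a minimal Python sketch. `parse_esxcli_fields` and `reports_thin` are hypothetical helpers. The sketch also assumes that a device ESXi sees as thin reports `Thin Provisioning Status: yes`, while `unknown` means ESXi is treating it as thick.

```python
def parse_esxcli_fields(output):
    """Parse the 'Key: Value' lines printed by
    `esxcli storage core device list -d <device>` into a dict (hypothetical helper)."""
    fields = {}
    for line in output.splitlines():
        # Split on the first colon only; values such as paths may contain more.
        key, sep, value = line.strip().partition(":")
        if sep and key:
            fields[key.strip()] = value.strip()
    return fields


def reports_thin(device_fields):
    """True only if ESXi sees the device as thin provisioned.

    Assumption: thin devices report "yes"; "unknown" (as in the dump above)
    means ESXi treats the device as thick.
    """
    return device_fields.get("Thin Provisioning Status", "unknown") == "yes"
```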

I then ran the following command to find out the VAAI status of the backing device:

esxcli storage core device vaai status get -d naa.60001440000000XXXXXXXXXXXXXXXXX

VAAI Plugin Name:

ATS Status: supported

Clone Status: supported

Zero Status: supported

Delete Status: unsupported

As we can see, Delete Status is set to unsupported, meaning automatic unmap won’t work for this datastore.

Delete Status is unsupported because ESXi considers the backing device a thick-provisioned volume. The device does not report itself as thin (Thin Provisioning Status: unknown).
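The reasoning in the two paragraphs above fits into a small check. It reads the `esxcli storage core device vaai status get` output and ties an unsupported Delete primitive back to the device's thin provisioning status. This is only a sketch: `parse_vaai_status` and `explain_unmap` are hypothetical names, and the messages reflect the diagnosis in this post rather than every possible cause.

```python
def parse_vaai_status(output):
    """Parse `esxcli storage core device vaai status get -d <device>` output
    into a dict (hypothetical helper)."""
    status = {}
    for line in output.splitlines():
        key, sep, value = line.strip().partition(":")
        if sep and key:
            status[key.strip()] = value.strip()
    return status


def explain_unmap(vaai, thin_status):
    """Say whether ESXi will send UNMAP to the device, and the likely reason if not.

    `thin_status` is the device's Thin Provisioning Status (e.g. "yes" or "unknown").
    """
    if vaai.get("Delete Status") == "supported":
        return "unmap supported"
    if thin_status != "yes":
        # The case in this post: the device is not reported as thin,
        # so ESXi treats it as thick and Delete shows as unsupported.
        return ("Delete unsupported: device not reported as thin "
                "(Thin Provisioning Status: %s)" % thin_status)
    # Outside this post's diagnosis; a prompt for further investigation only.
    return "Delete unsupported: device is thin, check array-side UNMAP support"
```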

So I jumped on the VPLEX and ran the following command in VPlexcli:

VPlexcli:/clusters/cluster-1/virtual-volumes/VOLUME NAME> ll

Name Value

-------------------------- ----------------------------------------

block-count 1073741824

block-size 4K

cache-mode synchronous

capacity 4T

consistency-group CG_XXX

expandable true

expandable-capacity 0B

expansion-method storage-volume

expansion-status -

health-indications []

health-state ok

initialization-status -

locality distributed

operational-status ok

recoverpoint-protection-at []

recoverpoint-usage -

scsi-release-delay 0

service-status running

storage-array-family

storage-tier -

supporting-device dd_VOLUME NAME

system-id VOLUME NAME

thin-capable true

thin-enabled disabled

volume-type virtual-volume

vpd-id VPD83T3:60001440000000XXXXXXXXXXXXXXXXX

Oh no! The thin-enabled attribute of the volume is set to disabled, even though thin-capable is true.
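When checking many volumes, you can spot the same condition (thin-capable, but not thin-enabled) by parsing the `ll` output. Below is a minimal Python sketch. It assumes the Name/Value layout shown above, in which attribute names never contain spaces but values may (e.g. `supporting-device dd_VOLUME NAME`). Both helper names are hypothetical.

```python
def parse_vplex_ll(output):
    """Parse the Name/Value table printed by `ll` in a VPlexcli
    virtual-volume context (hypothetical helper; layout assumed from above)."""
    attrs = {}
    for line in output.splitlines():
        line = line.strip()
        # Skip blank lines, the dashed separator and the "Name Value" header.
        if not line or line.startswith("-") or line.split() == ["Name", "Value"]:
            continue
        # Names never contain spaces; values may (e.g. "dd_VOLUME NAME").
        name, _, value = line.partition(" ")
        attrs[name] = value.strip()
    return attrs


def needs_thin_enable(attrs):
    """True when the volume could be thin but thin-enabled is still off."""
    return (attrs.get("thin-capable") == "true"
            and attrs.get("thin-enabled") == "disabled")
```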

To enable it, I ran the following command:

VPlexcli:/clusters/cluster-1/virtual-volumes/VOLUME NAME> set thin-enabled true

I then carried out a rescan on the ESX host and ran the same esxcli command to check the VAAI status:

esxcli storage core device vaai status get -d naa.60001440000000XXXXXXXXXXXXXXXXX

VAAI Plugin Name:

ATS Status: supported

Clone Status: supported

Zero Status: supported

Delete Status: supported

And the Delete Status changed to supported!

I then cranked up the reclaim rate to 2000 MB/s, and in no time the unused datastore blocks were released back to the VPLEX volume.
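On VMFS6, the reclaim rate is set per datastore with `esxcli storage vmfs reclaim config set`. The sketch below builds that command line and gives a rough best-case duration for a given amount of dead space. It assumes three things:

- The vSphere 6.7 options `--volume-label`, `--reclaim-method fixed` and `--reclaim-bandwidth` (in MB/s).
- A fixed-rate range of 100 to 2000 MB/s. Confirm both of these with `esxcli storage vmfs reclaim config set --help` on your build.
- MB means 2^20 bytes. The estimate also ignores any array-side throttling.

The function names are hypothetical.

```python
from typing import List


def reclaim_config_cmd(volume_label: str, bandwidth_mb_s: int) -> List[str]:
    """Build the esxcli command that sets a fixed unmap rate on a VMFS6 datastore.

    Hypothetical helper; the option names are assumed from vSphere 6.7.
    """
    # Assumed valid range for the fixed reclaim method.
    if not 100 <= bandwidth_mb_s <= 2000:
        raise ValueError("fixed reclaim bandwidth must be 100-2000 MB/s")
    return ["esxcli", "storage", "vmfs", "reclaim", "config", "set",
            "--volume-label", volume_label,
            "--reclaim-method", "fixed",
            "--reclaim-bandwidth", str(bandwidth_mb_s)]


def best_case_reclaim_seconds(dead_space_bytes: int, bandwidth_mb_s: int) -> float:
    """Lower bound on reclaim time if UNMAP ran flat out at the configured rate
    (MB taken as 2**20 bytes)."""
    return dead_space_bytes / (bandwidth_mb_s * 1024 ** 2)
```

As an upper-end illustration, reclaiming the whole 4T capacity shown in the VPLEX output (1073741824 blocks × 4K = 2^42 bytes) at 2000 MB/s would take at least 2^42 / (2000 × 2^20) ≈ 2097 seconds, or roughly 35 minutes. On the host, `subprocess.run(reclaim_config_cmd("VOLUME NAME", 2000), check=True)` would apply the setting.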

To confirm that the reclaim process was actually taking place, I used esxtop and vsish.

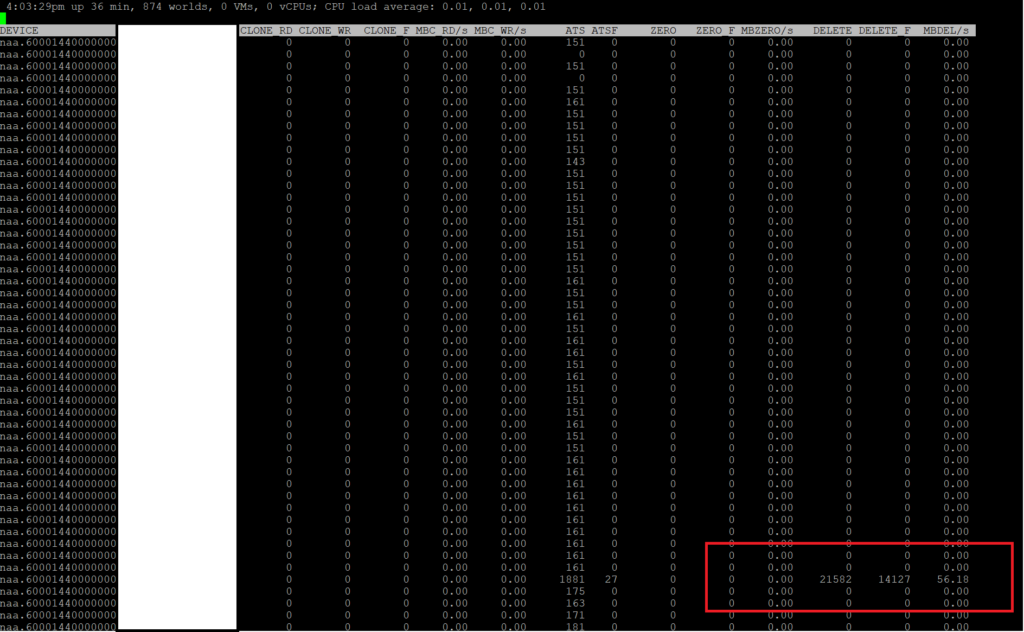

Run esxtop from the ESX host's shell, type “u” (disk device view), then “f”, then select VAAI statistics with “o”.

Watch the two DELETE counters (DELETE and DELETE_F) and MBDEL/s.
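If you note the device's VAAI counters at two points in time, the comparison below is one way to read them. It is a sketch that rests on several assumptions:

- DELETE counts UNMAP commands that completed, and DELETE_F counts failed ones.
- Both counters are cumulative.
- MBDEL/s is the current delete throughput.

The function name and its dict-of-counters input are hypothetical.

```python
from typing import Dict


def unmap_state(before: Dict[str, float], after: Dict[str, float]) -> str:
    """Classify UNMAP activity from two esxtop VAAI samples of one device.

    Assumes DELETE and DELETE_F are cumulative counters (successful and
    failed UNMAPs) and MBDEL/s is the current delete rate.
    """
    issued = after["DELETE"] - before["DELETE"]
    failed = after["DELETE_F"] - before["DELETE_F"]
    if failed > 0:
        return "failing"       # new failed UNMAPs since the first sample
    if issued > 0 or after["MBDEL/s"] > 0:
        return "reclaiming"    # UNMAPs are completing
    return "idle"              # nothing sent between the samples
```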

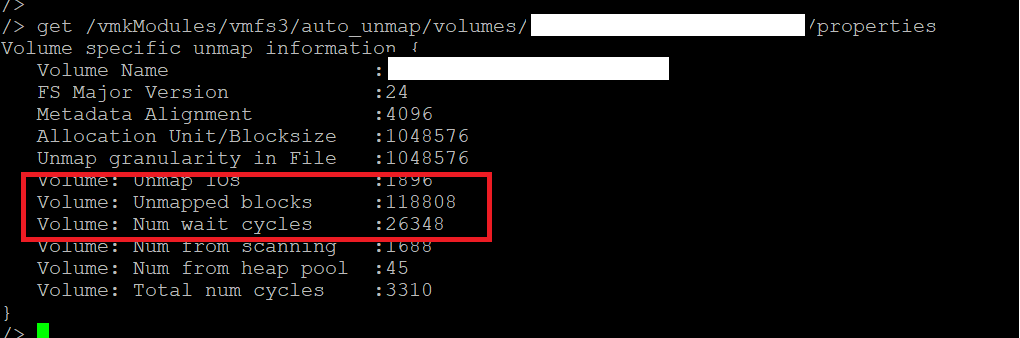

Run vsish from the ESX host's shell, then type the following command:

get /vmkModules/vmfs3/auto_unmap/volumes/VOLUME NAME/properties